Pure Python Machine Learning Module: Least Squares Class

Find it on GitHub

Overview

In the last post, we went from the complete math to working Python code for the Least Squares Regression method. This post takes that least squares code, puts it into a clean class structure, and stores it in a module that we will grow over time with other machine learning tools. Classes, when used well (and I hope that I am using them well), provide great benefits. Also, starting this machine learning module now will make the upcoming posts much easier to understand and to use.

The Module Code

I am never quite sure of the best way to present code to general audiences, but I try to present it in the way that's easiest to digest. I'll present the entire module first, and then repeat blocks of it with more detailed explanations.

It may be that, with the documentation in the code, you won't need to go through the rest of the post after looking through the complete module code below. If that's the case, I hope you will still visit the closing remarks at the bottom. As always, I hope you'll get the code and make it your own. I can easily imagine many people wanting to do things a bit differently from the way that I have done them.

The ENTIRE NEW INITIAL Machine Learning Module

I trust that the documentation in the module’s code will give you a good first overview or better. I’ll attempt to anticipate and cover any potential points of confusion in the code discussion sections below this section.

```python
import LinearAlgebraPurePython as la


class Least_Squares:

    def __init__(self, fit_intercept=True, tol=0):
        """
        Class structure for least squares regression
        without machine learning libraries

        :param fit_intercept=True: If the data that
            you send in does not have a column of 1's
            for fitting the y-intercept, it will be
            added by default for you. If your data has
            a column of 1's, set this parameter to False
        :param tol=0: This is a tolerance check used in
            the solve_equations function in the
            LinearAlgebraPurePython module
        """
        self.fit_intercept = fit_intercept
        self.tol = tol

    def __format_data_correctly(self, D):
        """
        Private function used to make sure data is formatted
        as needed by the various functions in the procedure;
        this allows more flexible entry of data

        :param D: The data structure to be formatted;
            assures the data is a list of lists
        :returns: Correctly formatted data
        """
        if not isinstance(D[0], list):
            return [D]
        else:
            return D

    def __orient_data_correctly(self, D):
        """
        Private function to ensure data is oriented
        correctly for least squares operations;
        this allows more flexible entry of data

        :param D: The data structure to be oriented;
            want at least as many rows as columns
        :returns: Correctly oriented data
        """
        if len(D) < len(D[0]):
            return la.transpose(D)
        else:
            return D

    def __condition_data(self, D):
        """
        Private function to format data in
        accordance with the previous two private functions

        :param D: The data
        :returns: Correctly conditioned data
        """
        D = self.__format_data_correctly(D)
        D = self.__orient_data_correctly(D)
        return D

    def __add_ones_column_for_intercept(self, X):
        """
        Private function to prepend a column of 1's
        to the input matrix

        :param X: The matrix of input data
        :returns: A new matrix with a column of 1's
            added to the front; X itself is not modified,
            so the caller's data stays unchanged
        """
        return [[1.0] + row for row in X]

    def fit(self, X, Y):
        """
        Callable method of an instance of this class
        to determine a set of coefficients for the
        given data

        :param X: The input training data (conditioned internally)
        :param Y: The output training data (conditioned internally)
        """
        # Section 1: Condition the input and output data
        self.X = self.__condition_data(X)
        self.Y = self.__condition_data(Y)

        # Section 2: Prepend a column of 1's unless
        # the user knows this is NOT necessary
        if self.fit_intercept:
            self.X = self.__add_ones_column_for_intercept(self.X)

        # Section 3: Form the normal equations,
        # (X^T X) coefs = X^T Y, by multiplying X and Y by
        # the transpose of X, then solve for the coefficients
        # using our general solve_equations function
        AT = la.transpose(self.X)
        ATA = la.matrix_multiply(AT, self.X)
        ATB = la.matrix_multiply(AT, self.Y)
        self.coefs = la.solve_equations(ATA, ATB, tol=self.tol)

    def predict(self, X_test):
        """
        Condition the test data and then use the existing
        model (existing coefficients) to solve for test
        results

        :param X_test: Input test data
        :returns: Output results for test data
        """
        # Section 1: Condition the input data
        self.X_test = self.__condition_data(X_test)

        # Section 2: Prepend a column of 1's unless
        # the user knows this is NOT necessary
        if self.fit_intercept:
            self.X_test = self.__add_ones_column_for_intercept(
                self.X_test)

        # Section 3: Apply the conditioned input data to the
        # model coefficients
        return la.matrix_multiply(self.X_test, self.coefs)
```

Since the full module above is documented in detail, since we will discuss its blocks below, and since the code in the repo has the same in-code documentation, the in-code documentation for the repeated code blocks below has been removed or reduced to save space.

Imports and __init__

The one import is the pure Python linear algebra module that we grew in previous blog posts. I'll provide quick links to those posts below this code block. This module is handy because it holds the most basic operations we need for linear algebra work, and it keeps this least squares regression class, and future classes, much cleaner by sparing us from repeating commonly and frequently needed code.

I imagine that many people, myself included, would rather not enter a column of 1's manually for fitting the y-intercept of the regression model. Thus, the default for this class is to add that column of 1's automatically. The polynomial class in an upcoming post will also add the 1's column automatically, so when passing polynomial input data into an instance of this class, set the fit_intercept Boolean to False.

Some people reading this may prefer to add an auto-detect feature that checks for a column of 1's. I'd personally prefer that too, but I felt it best to leave it as an exercise for those who want that type of functionality.

The reason for having a tolerance parameter was covered in the posts on matrix inversion and on solving a system of equations. Links to those posts are below this code block.

```python
import LinearAlgebraPurePython as la


class Least_Squares:

    def __init__(self, fit_intercept=True, tol=0):
        self.fit_intercept = fit_intercept
        self.tol = tol
```
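To make the fit_intercept default concrete, the sketch below shows what prepending the 1's column does to a small input matrix, plus one possible starting point for the auto-detect exercise mentioned above. Both function names, add_ones_column and has_ones_column, are hypothetical illustrations rather than part of the module; add_ones_column builds new rows instead of modifying the caller's list.

```python
def add_ones_column(X):
    """Return a new matrix with 1.0 prepended to every row.

    Hypothetical helper mirroring the class's intercept step; the
    caller's matrix X is left unchanged.
    """
    return [[1.0] + row for row in X]


def has_ones_column(X, tol=0.0):
    """Return True if some column of X is all 1's (within tol).

    Hypothetical helper for the auto-detect exercise; X is a
    non-empty list of equal-length row lists.
    """
    for j in range(len(X[0])):
        if all(abs(row[j] - 1.0) <= tol for row in X):
            return True
    return False


# One input variable, three samples (rows = samples)
X = [[2.0], [4.0], [6.0]]
X_with_ones = add_ones_column(X)
print(X_with_ones)                   # [[1.0, 2.0], [1.0, 4.0], [1.0, 6.0]]
print(X)                             # [[2.0], [4.0], [6.0]]
print(has_ones_column(X))            # False
print(has_ones_column(X_with_ones))  # True
```

Prepending a second 1's column to data that already has one would make two columns of X identical and leave X^T X singular, which is why fit_intercept should be False for such data. If a class used a check like has_ones_column to decide automatically, predict would need to reuse the decision made in fit (for example, by storing it on the instance), and a genuine input variable that happens to be constant at 1 would be mistaken for the intercept column.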

Here are those quick links to previous posts that you may want to go through to better understand how and why we arrived at this post:

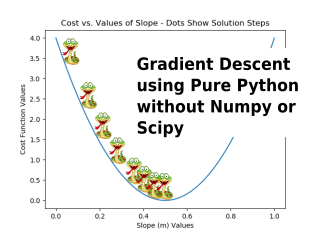

- Least Squares: Math to Pure Python without Numpy or Scipy

- Find the Determinant of a Matrix with Pure Python without Numpy or Scipy

- BASIC Linear Algebra Tools in Pure Python without Numpy or Scipy

- Solving a System of Equations in Pure Python without Numpy or Scipy
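If you want to experiment with the class before cloning the repo, the three functions it calls from LinearAlgebraPurePython (transpose, matrix_multiply, and solve_equations) can be approximated with the minimal stand-ins below. These are my own sketches, not the module's actual code. In particular, this solve_equations uses Gauss-Jordan elimination with partial pivoting and treats tol as a threshold below which a pivot counts as zero; the real function may use its tolerance differently.

```python
from types import SimpleNamespace


def transpose(M):
    """Return the transpose of a list-of-lists matrix."""
    return [list(col) for col in zip(*M)]


def matrix_multiply(A, B):
    """Return the product A·B of two list-of-lists matrices."""
    if len(A[0]) != len(B):
        raise ValueError("Inner matrix dimensions must agree")
    B_cols = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in B_cols]
            for row in A]


def solve_equations(A, B, tol=0):
    """Solve A·X = B for X by Gauss-Jordan elimination.

    Stand-in sketch: a pivot whose magnitude is <= tol is treated as
    zero, i.e., the system is reported as singular.
    """
    n = len(A)
    # Work on an augmented copy [A | B] so the inputs are not modified
    M = [list(A[i]) + list(B[i]) for i in range(n)]
    for c in range(n):
        # Partial pivoting: pick the row with the largest entry in column c
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) <= tol:
            raise ValueError("Matrix is singular to within tol")
        M[c], M[p] = M[p], M[c]
        # Scale the pivot row so the pivot becomes 1
        pivot = M[c][c]
        M[c] = [v / pivot for v in M[c]]
        # Eliminate column c from every other row
        for r in range(n):
            if r != c:
                factor = M[r][c]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[c])]
    # The right-hand block now holds the solution X
    return [row[n:] for row in M]


# Hypothetical stand-in for "import LinearAlgebraPurePython as la"
la = SimpleNamespace(transpose=transpose,
                     matrix_multiply=matrix_multiply,
                     solve_equations=solve_equations)

# Example: 2x + y = 3 and x + 3y = 5 have the solution x = 0.8, y = 1.4
print(la.solve_equations([[2.0, 1.0], [1.0, 3.0]], [[3.0], [5.0]]))
```

With these definitions in place of the import, the class code in this post can be pasted below them and run as-is, since it only calls la.transpose, la.matrix_multiply, and la.solve_equations.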

Private Convenience Functions

The next block of code includes the private functions that handle the general housekeeping of formatting and conditioning the data as needed by the least squares steps, AND that allow users to enter the data in easier ways.

- __format_data_correctly simply makes sure that the data used by the model is a list of lists (i.e., matrix style); a flat list such as [1, 2, 3] is wrapped as [[1, 2, 3]].

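- __orient_data_correctly ensures that the data is oriented correctly, meaning that there should be at least as many rows (samples) as columns (variables). If there are more columns than rows, we return the transpose of that data. Keep in mind that this heuristic also transposes a single sample with several input variables, such as [[x1, x2]], so data passed to predict should have at least as many rows as columns.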

- __condition_data is merely a convenience function that calls each of the previous two functions. I could understand someone wanting to remove this function from their version of the code.

- __add_ones_column_for_intercept simply prepends a column of 1's to the input data matrix IF needed.

```python
    def __format_data_correctly(self, D):
        if not isinstance(D[0], list):
            return [D]
        else:
            return D

    def __orient_data_correctly(self, D):
        if len(D) < len(D[0]):
            return la.transpose(D)
        else:
            return D

    def __condition_data(self, D):
        D = self.__format_data_correctly(D)
        D = self.__orient_data_correctly(D)
        return D

    def __add_ones_column_for_intercept(self, X):
        # Build new rows so the caller's matrix is not modified
        return [[1.0] + row for row in X]
```
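To see what the conditioning steps do with different input shapes, here is a standalone sketch. The function names are hypothetical stand-ins that mirror the private methods above, and transpose is reimplemented with zip so the example runs without LinearAlgebraPurePython.

```python
def transpose(M):
    """Return the transpose of a list-of-lists matrix."""
    return [list(col) for col in zip(*M)]


def format_data(D):
    """Wrap a flat list as a one-row matrix (mirrors __format_data_correctly)."""
    return D if isinstance(D[0], list) else [D]


def orient_data(D):
    """Transpose when there are fewer rows than columns (mirrors __orient_data_correctly)."""
    return transpose(D) if len(D) < len(D[0]) else D


def condition_data(D):
    """Format, then orient (mirrors __condition_data)."""
    return orient_data(format_data(D))


# A flat list of outputs becomes a column vector
print(condition_data([3.0, 5.0, 7.0]))               # [[3.0], [5.0], [7.0]]
# A 2 x 4 "wide" matrix is treated as 2 variables x 4 samples
print(condition_data([[1, 2, 3, 4], [5, 6, 7, 8]]))  # [[1, 5], [2, 6], [3, 7], [4, 8]]
# Caveat: a single sample with two variables is transposed as well
print(condition_data([[1.5, 2.5]]))                  # [[1.5], [2.5]]
```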

The “fit” and “predict” Functions

Thanks to our previous work stored in the imported module LinearAlgebraPurePython, and the above convenience functions, these most important functions in the next code block below are very clean.

First, the “fit” function:

- fit – Section 1: Conditions our input and output training data as described above.

- fit – Section 2: Adds a column of 1's to the input data if needed. The default is to add this column automatically, so if you include a column of 1's for the y-intercept ahead of time, set fit_intercept to False when instantiating the class.

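- fit – Section 3: Applies the math from the Least Squares blog post in code. In summary, multiplying both the input matrix X and the output matrix Y by the transpose of X produces the normal equations, (X^T X) coefs = X^T Y, and solving them gives the coefficients of the best fit line (or hyperplane, when there are several input variables) through our training data.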

- The model IS essentially the coefficients that come from the solution and are stored as <instance_name>.coefs, which can be printed, or passed elsewhere, if so desired.

Second, the “predict” function:

- predict – Section 1: Conditions the test data as necessary, as was done in fit above.

- predict – Section 2: Prepends the column of 1's to the input data matrix if necessary.

- predict – Section 3: Applies the current coefficients (that is, the fitted model) to the test data to predict the output test data.

```python
    def fit(self, X, Y):
        # Section 1: Condition the input and output data
        self.X = self.__condition_data(X)
        self.Y = self.__condition_data(Y)

        # Section 2: Prepend a column of 1's ... or not
        if self.fit_intercept:
            self.X = self.__add_ones_column_for_intercept(self.X)

        # Section 3: Form the normal equations and solve
        AT = la.transpose(self.X)
        ATA = la.matrix_multiply(AT, self.X)
        ATB = la.matrix_multiply(AT, self.Y)
        self.coefs = la.solve_equations(ATA, ATB, tol=self.tol)

    def predict(self, X_test):
        # Section 1: Condition the input data
        self.X_test = self.__condition_data(X_test)

        # Section 2: Prepend a column of 1's ... or not
        if self.fit_intercept:
            self.X_test = self.__add_ones_column_for_intercept(
                self.X_test)

        # Section 3: Apply the model coefficients
        return la.matrix_multiply(self.X_test, self.coefs)
```
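As a small worked example of Section 3 in both fit and predict, the sketch below fits noise-free data generated from y = 2 + 3x and then predicts three new points. It is self-contained: transpose and matrix_multiply are minimal reimplementations, and, for brevity, the 2 x 2 normal equations are solved with Cramer's rule (solve_2x2, a hypothetical helper) rather than with the module's general solve_equations function.

```python
def transpose(M):
    """Return the transpose of a list-of-lists matrix."""
    return [list(col) for col in zip(*M)]


def matrix_multiply(A, B):
    """Return the product A·B of two list-of-lists matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


def solve_2x2(A, B):
    """Solve the 2 x 2 system A·c = B with Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    c0 = (B[0][0] * A[1][1] - A[0][1] * B[1][0]) / det
    c1 = (A[0][0] * B[1][0] - B[0][0] * A[1][0]) / det
    return [[c0], [c1]]


# fit: training data from y = 2 + 3x, 1's column already prepended
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
Y = [[2.0], [5.0], [8.0], [11.0]]

XT = transpose(X)
XTX = matrix_multiply(XT, X)   # [[4.0, 6.0], [6.0, 14.0]]
XTY = matrix_multiply(XT, Y)   # [[26.0], [54.0]]
coefs = solve_2x2(XTX, XTY)
print(coefs)                   # [[2.0], [3.0]] -> intercept 2, slope 3

# predict: prepend 1's to the test inputs, then multiply by coefs
X_test = [[4.0], [5.0], [10.0]]
X_test_with_ones = [[1.0] + row for row in X_test]
print(matrix_multiply(X_test_with_ones, coefs))  # [[14.0], [17.0], [32.0]]
```

Because these training points lie exactly on a line, the residuals are zero and the coefficients match the generating intercept and slope; with noisy data, the same steps return the coefficients that minimize the sum of squared residuals.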

All of the test cases used in the Least Squares: Math to Pure Python without Numpy or Scipy post and repository are repeated in the repository for this new module. To keep things cleaner, they've been placed inside a Least_Squares_Practice folder along with the pure Python Linear Algebra and Machine Learning modules, so the test files can import them easily. This way, as new classes are added, separate practice folders can be added for practice applications of those classes, each with the minimal machine learning module needed for that folder. I hope you'll clone the repo and run the practice files using Python 3.x.

One important change from the previous post is that the first practice file has been split into two parts:

- LeastSquaresPractice_1a.py applies data for training WITHOUT a 1’s column in the input data to fit the y intercept coefficient.

- LeastSquaresPractice_1b.py applies data for training WITH a 1’s column in the input data to fit the y intercept coefficient.
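The two practice files should arrive at the same coefficients, because fit_intercept=True applied to data without a 1's column builds exactly the matrix you would enter by hand for the 1b case. The sketch below checks that idea with a minimal, self-contained least squares fit; fit_coefs is a hypothetical helper that solves the normal equations with Gauss-Jordan elimination rather than the module's solve_equations, and the data are illustrative values generated from an exact plane, not the data in the practice files.

```python
def fit_coefs(X, Y, fit_intercept=True):
    """Least squares coefficients via the normal equations.

    Hypothetical helper: X and Y are lists of row lists (samples as
    rows); the normal equations are solved by Gauss-Jordan elimination.
    """
    if fit_intercept:
        X = [[1.0] + row for row in X]  # new rows; caller's X untouched
    XT = [list(col) for col in zip(*X)]
    XTX = [[sum(a * b for a, b in zip(r, c)) for c in zip(*X)] for r in XT]
    XTY = [[sum(a * b for a, b in zip(r, c)) for c in zip(*Y)] for r in XT]
    n = len(XTX)
    M = [XTX[i] + XTY[i] for i in range(n)]  # augmented [XTX | XTY]
    for c in range(n):
        # Partial pivoting: move the largest entry in column c into place
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [v / pivot for v in M[c]]
        # Eliminate column c from every other row
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[c])]
    return [row[n:] for row in M]


# Illustrative two-input data (not taken from the practice files)
X_1a = [[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 5.0]]
Y = [[1.0 + 2.0 * a - 0.5 * b] for a, b in X_1a]  # exact plane
X_1b = [[1.0] + row for row in X_1a]  # 1's column entered "by hand"

coefs_1a = fit_coefs(X_1a, Y, fit_intercept=True)   # like practice 1a
coefs_1b = fit_coefs(X_1b, Y, fit_intercept=False)  # like practice 1b
print(coefs_1a)  # approximately [[1.0], [2.0], [-0.5]]
print(coefs_1b)  # same coefficients
```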

Closing

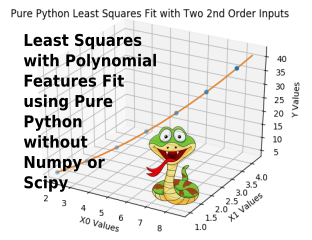

With all our previous work in developing our pure python linear algebra module and our pure python machine learning module, we are ready to build some more complicated machine learning applications for further insight. The next post will cover how to produce polynomial inputs for any order of polynomial AND for any number of input variables, and we will be able to use that as an input to ANY of our future regression modeling methods when we want to try polynomial regression model fitting of order 2 or greater (actually, it even works for first order and can be used as another way to append a column of 1’s to the input matrix).

Until next time …